To make an AI chat bot behave, Kenyan workers say they were 'mentally scarred' by graphic text

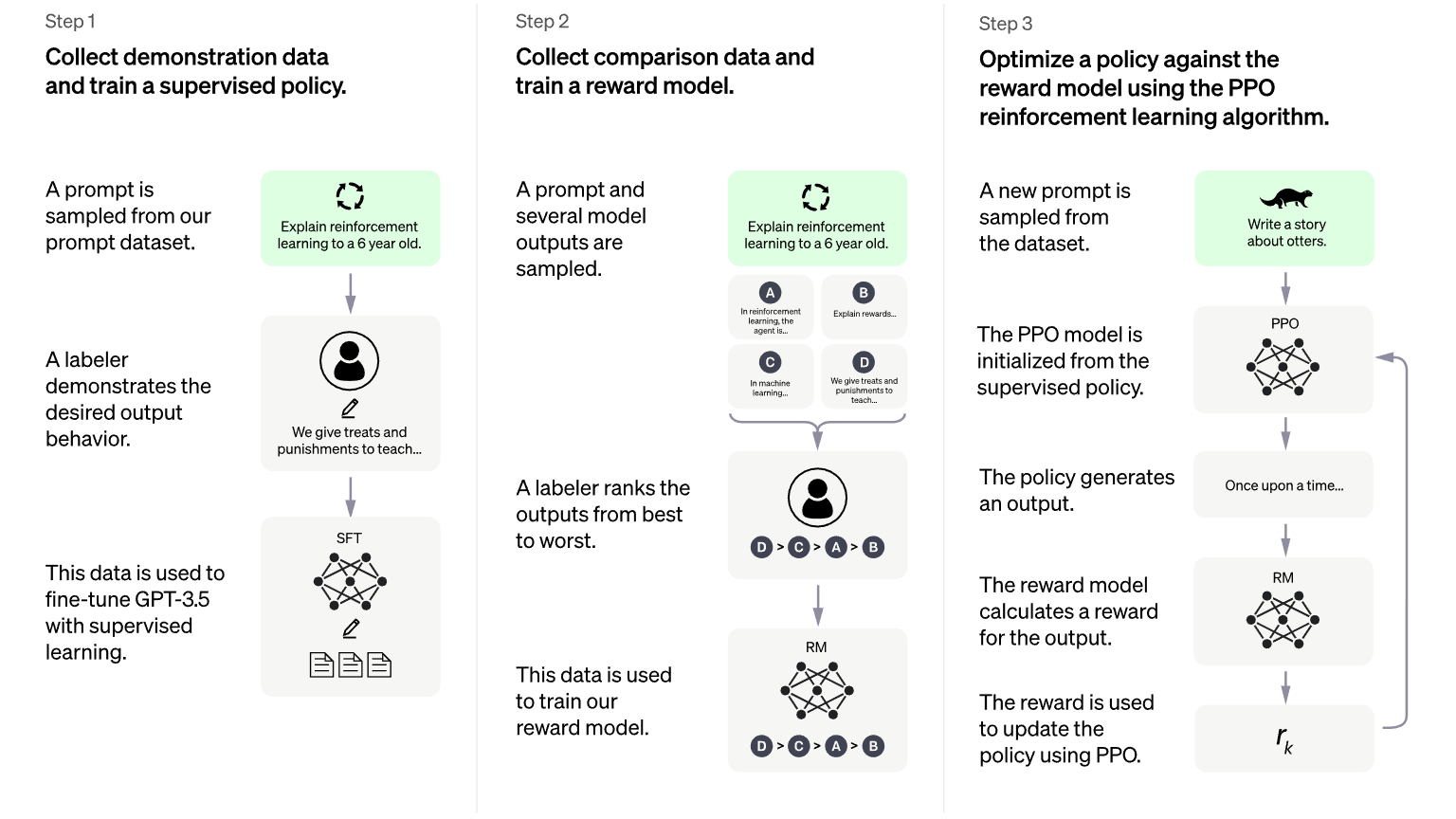

ChatGPT has impressed millions with its ability to string together coherent, sometimes even accurate, sentences, blurbs, scripts, and more. To write like a human, the AI bot was trained with machine learning algorithms on a massive catalogue of material scoured from the web. But the development of ChatGPT wasn't all automated: Human labour was required to stop ChatGPT falling into the same trap as its predecessor GPT-3, which was capable of making inappropriate, sometimes even racist, comments.

According to a recent investigation by Time, ChatGPT creator OpenAI outsourced this unsavoury data processing task to Kenyan workers, many of whom reportedly earn less than $2 an hour.

ChatGPT, like image generation tools such as DALL-E (also operated by OpenAI), Stable Diffusion, and Midjourney, is trained on datasets so immense that they can't be closely curated by hand. Without training, ChatGPT wouldn't work at all, but not all of the text you can find on the internet leads to the kind of comments you want your AI bot making.

The outsourced work involved labelling examples of the kind of offensive text that might show up in the training material. A collection of these labelled text samples was then fed into another AI, training it to notice and remove similar offensive text from ChatGPT's responses to users.

Training the AI to avoid inappropriate language and themes keeps ChatGPT cleaner and makes it harder to use to produce disturbing content. But in this effort to improve the bot, OpenAI exposed low-paid workers in Kenya to some of the worst material on the web.

"To get those labels, OpenAI sent tens of thousands of snippets of text to an outsourcing firm in Kenya, beginning in November 2021," Time reports. "Much of that text appeared to have been pulled from the darkest recesses of the internet. Some of it described situations in graphic detail like child sexual abuse, bestiality, murder, suicide, torture, self harm, and incest."

The Time report says that one worker suffered from recurring visions as a result of the content they encountered on the job. All four of the workers Time spoke to said they were "mentally scarred by the work."

There were reportedly around 36 workers employed to carry out the task on OpenAI's behalf, each expected to "read and label between 150 and 250 passages of text per nine-hour shift."

The company responsible for the outsourcing work is called Sama, a San Francisco-based firm with workers in Kenya, Uganda, and India. Time reports that OpenAI signed three contracts for the labelling work in late 2021, worth around $200,000 in total.

Sama says its employees could attend individual and group sessions with professional mental health therapists at any time. However, the workers who spoke to Time say only group sessions were available to them.

"Our mission is to ensure artificial general intelligence benefits all of humanity, and we work hard to build safe and useful AI systems that limit bias and harmful content," an OpenAI spokesperson told Time regarding the outsourced data processing work. "Classifying and filtering harmful [text and images] is a necessary step in minimizing the amount of violent and sexual content included in training data and creating tools that can detect harmful content."

According to Time, the nature of Sama's work for OpenAI took a different turn in February 2022, when Sama began collecting "sexual and violent images," some of which would be deemed illegal in the US. OpenAI said that labelling harmful images was "a necessary step" in making its tools safe to use, but that it never intended for Sama to collect the most extreme category of images, and that their collection was the result of a miscommunication.

Sama ultimately terminated its contract with OpenAI early. The report suggests that the Sama team raised concerns over the content of the images, which eventually led to the deal between the two companies collapsing. In the aftermath, some Sama workers were moved to lower-paying contracts, while others had their positions terminated entirely. The full Time report goes into much greater detail on OpenAI's relationship with Sama.

OpenAI is currently valued in the billions of dollars. Microsoft is reportedly looking to sink more money into the AI firm, despite its own recent mass layoffs, and has announced plans to integrate OpenAI technologies into its services.

Moderation work has long involved some degree of human suffering: A 2019 report on the mental wellbeing of the moderation teams Facebook relied on described long-lasting trauma symptoms caused by the work.

OpenAI's labelling needs are also a facet of a larger ethical crisis growing at the centre of AI research: the problem of what to use as training material. Machines can't learn to behave like humans without human-made material, but not everyone wants their work to be fed to an algorithm. Last year, artists started labelling their work "no AI" in an attempt to ward off companies gathering training data for image generators. Now here's the reverse problem: material that bot makers don't want influencing their AI. Again, the task of rearing respectful AI bots comes down to people, in this case workers paid to read the web's most disturbing content.